Consistent Character Creation with GPT-4 Omni: Exploring the Capabilities

Explore the powerful capabilities of GPT-4 Omni, OpenAI's latest AI model that can seamlessly integrate audio, vision, and text in real-time interactions. Discover its speed, cost-effectiveness, and ability to create consistent characters across multiple scenes, making it a game-changer for developers and content creators.

February 24, 2025

Discover how the latest advancements in AI, including the release of GPT-4 Omni, are revolutionizing human-computer interactions and opening up new possibilities for creating consistent and engaging digital experiences. This blog post explores the capabilities of this cutting-edge technology and its potential impact on various industries.

Exploring the Capabilities of GPT-4 Omni

Pricing and Cost-Efficiency of GPT-4 Omni

Model Evaluations and Benchmarking

Language Tokenization and Representation

Safety and Limitations of GPT-4 Omni

Availability and Access to GPT-4 Omni

Consistent Character Creation with GPT-4 Omni

Conclusion

Exploring the Capabilities of GPT-4 Omni

OpenAI's recent release of GPT-4 Omni has introduced a powerful model that can reason across audio, vision, and text in real-time. This new model offers several impressive capabilities:

- Multimodal Interaction: GPT-4 Omni accepts any combination of text, audio, image, and video as input and can generate any combination of text, audio, and image outputs. This allows for more natural human-computer interactions.

- Rapid Response: The model can respond to audio inputs in as little as 232 milliseconds, with an average of 320 milliseconds, which is similar to human response time in a conversation.

- Improved Performance: GPT-4 Omni outperforms previous models on various benchmarks, including text evaluation, audio automatic speech recognition (ASR), and audio translation.

- Cost Efficiency: In the API, the new model is 50% cheaper than GPT-4 Turbo, making it more accessible for developers. The free version of ChatGPT now uses GPT-4 Omni, allowing more users to benefit from its capabilities.

The article explores several use cases for GPT-4 Omni, such as real-time translation, point-and-learn language learning, and interactive AI assistants. The model's ability to maintain consistent character representations across multiple scenes is also highlighted as a notable feature.

While the model still has some limitations, such as occasional interruptions in conversational flow, the overall capabilities of GPT-4 Omni represent a significant step forward in natural language processing and multimodal AI.

Pricing and Cost-Efficiency of GPT-4 Omni

The announcement of GPT-4 Omni brings significant improvements in pricing and cost-efficiency compared to previous models. Some key highlights:

- The input cost has dropped to $0.005 per 1,000 tokens, down from $0.01 for GPT-4 Turbo.

- The output cost is now $0.015 per 1,000 tokens, reduced from $0.03 for GPT-4 Turbo.

- Vision (image input) pricing has also dropped, making overall usage of GPT-4 Omni more cost-effective.

- Overall, this is a 50% price reduction relative to GPT-4 Turbo, making GPT-4 Omni a more accessible option for developers and users. (GPT-3.5 Turbo remains cheaper per token; the 50% comparison is with GPT-4 Turbo.)

- The free version of ChatGPT now uses the GPT-4 Omni model, allowing more users to benefit from the improved capabilities and performance at no additional cost.

- With these pricing changes, the article suggests that there is little reason to use the older GPT-4 Turbo model, as GPT-4 Omni provides superior performance and cost-efficiency.
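To make the per-token figures above concrete, here is a minimal Python sketch that prices a single request under both models. It hard-codes the launch prices quoted in this article (prices change over time, so check OpenAI's pricing page before relying on them), and the 2,000-input/500-output token workload is a made-up example, not a measurement.

```python
# Per-1,000-token API prices in USD, as quoted in this article at GPT-4 Omni's launch.
# These are assumptions for illustration; current prices may differ.
PRICES_PER_1K = {
    "gpt-4-turbo": {"input": 0.01, "output": 0.03},
    "gpt-4o": {"input": 0.005, "output": 0.015},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request, given its input and output token counts."""
    price = PRICES_PER_1K[model]
    input_cost = (input_tokens / 1000) * price["input"]
    output_cost = (output_tokens / 1000) * price["output"]
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical workload: 2,000 input tokens and 500 output tokens per request.
    for model in PRICES_PER_1K:
        print(f"{model}: ${request_cost(model, 2000, 500):.4f} per request")
```

For any mix of input and output tokens, the GPT-4 Omni cost comes out at half the GPT-4 Turbo cost, because both the input and the output price were halved.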

Model Evaluations and Benchmarking

OpenAI has put the new GPT-4 Omni model through various benchmark tests to evaluate its performance. The model was compared against other language models such as GPT-4 Turbo, the original GPT-4, Claude 3 Opus, Gemini Pro 1.5, Gemini 1.0, and Llama 3.

The results show that GPT-4 Omni outperforms almost every other model across different test categories:

- Text Evaluation: GPT-4 Omni achieves the highest scores on most of the text benchmarks.

- Audio ASR (Automatic Speech Recognition): GPT-4 Omni outperforms OpenAI's previous Whisper-v3 model, with lower error rates.

- Audio Translation: GPT-4 Omni beats all the other models in this test.

- M3Exam (zero-shot): On this multilingual and vision exam benchmark, GPT-4 Omni outperforms the original GPT-4 model.

- Vision Understanding Evaluations: GPT-4 Omni achieves the highest score on each of the reported vision benchmarks.
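ASR comparisons like the one above are usually reported as word error rate (WER), where lower is better. As a rough illustration of what such a metric measures, the following sketch computes WER with a word-level edit distance. The function name and example sentences are illustrative only; this is not OpenAI's evaluation code.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words,
    computed with a word-level Levenshtein (edit distance) table."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # dist[i][j] = minimum edits to turn ref[:i] into hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words

    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or match if cost is 0)
            )
    return dist[len(ref)][len(hyp)] / len(ref)


if __name__ == "__main__":
    # One substitution ("sat" -> "sit") and one deletion ("the") over 6 reference words.
    print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
```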

The article also mentions that GPT-4 Omni's improved language tokenization contributes to its cost-effectiveness. Although English text needs only about 1.1 times fewer tokens, the savings can be significant when scaled across large amounts of text.

Language Tokenization and Representation

The article notes that one reason GPT-4 Omni is cheaper to use is that it represents text in fewer tokens. Even though English text needs only about 1.1 times fewer tokens, this can add up to significant cost savings across hundreds of thousands of words.

The article's example is a sentence that previously took 27 tokens and now takes only 24. The 50% cost reduction itself comes from the lower per-token price; the more efficient tokenizer adds savings on top of it, because the same text is split into fewer billable tokens.
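The arithmetic behind this paragraph can be sketched in a few lines of Python. The 27 and 24 token counts come from the article's example, and the per-1,000-token input prices are the launch figures quoted in the pricing section. The function names are hypothetical helpers, not part of any library.

```python
# Token counts for the article's example English sentence.
OLD_TOKENS = 27  # previous tokenizer
NEW_TOKENS = 24  # GPT-4 Omni tokenizer

# Launch input prices per 1,000 tokens (USD), as quoted in the article.
GPT4_TURBO_INPUT = 0.01
GPT4O_INPUT = 0.005


def compression_ratio(old_tokens: int, new_tokens: int) -> float:
    """How many times fewer tokens the new tokenizer needs for the same text."""
    return old_tokens / new_tokens


def relative_input_cost(old_tokens: int, new_tokens: int,
                        old_price: float, new_price: float) -> float:
    """Cost of encoding the same text with the new model, as a fraction of the old cost.
    Both the token count and the per-token price change, so the two effects multiply."""
    return (new_tokens * new_price) / (old_tokens * old_price)


if __name__ == "__main__":
    print(f"{compression_ratio(OLD_TOKENS, NEW_TOKENS):.3f}x fewer tokens")
    frac = relative_input_cost(OLD_TOKENS, NEW_TOKENS, GPT4_TURBO_INPUT, GPT4O_INPUT)
    print(f"new input cost is {frac:.1%} of the old cost")
```

Here 27 / 24 is 1.125, which rounds to the 1.1x figure above. Combined with the halved per-token price, this sentence costs about 44% of its previous price (0.5 / 1.125) rather than exactly 50%.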

The article suggests that this more efficient tokenization is a key factor in making GPT-4 Omni a more cost-effective choice for developers and users, especially for applications that process large volumes of text across multiple languages. The reduction is larger for many non-English languages than for English.

Safety and Limitations of GPT-4 Omni

As with its other AI models, OpenAI emphasizes the safety and limitations of GPT-4 Omni. The article notes that the model still has some limitations, such as occasionally interrupting the user and sometimes needing to be told explicitly when the user has finished speaking. This issue persists despite GPT-4 Omni's faster response times.

The article also mentions that the model has built-in safety features to address potential misuse or harmful outputs. However, it does not describe these safety measures in detail.

Overall, while GPT-4 Omni represents a significant advancement in OpenAI's language models, the company remains cautious and vigilant about the potential risks and limitations of the technology. Ongoing monitoring and refinement of the model's safety features will likely be a priority as it is deployed more widely.

Availability and Access to GPT-4 Omni

GPT-4 Omni, the latest flagship model from OpenAI, is now widely available and accessible to users. Here are the key details:

- GPT-4 Omni's text and image capabilities are now available in ChatGPT, including the free tier, giving all users access to them.

- ChatGPT Plus users get up to 5 times higher message limits than free-tier users.

- A new version of Voice Mode powered by GPT-4 Omni was announced for an alpha rollout to ChatGPT Plus users in the coming weeks, providing more seamless audio-based interactions.

- GPT-4 Omni is available as a standalone text and vision model through the OpenAI API, offering developers twice the speed and half the price compared to the previous GPT-4 Turbo model.

- Given these improvements, GPT-4 Omni is the natural choice for developers, leaving few remaining use cases for the older GPT-4 Turbo model.

- The pricing for GPT-4 Omni has been significantly reduced, with the input cost dropping to $0.005 per 1,000 tokens and the output cost at $0.015 per 1,000 tokens, making it more accessible for a wide range of applications.
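For developers, switching usually means changing the model name in existing Chat Completions calls; GPT-4 Omni's API identifier is `gpt-4o`. The sketch below only builds the JSON request body for a text-plus-image prompt and does not send it, so it runs without an API key. The helper name `build_vision_request` and the image URL are placeholders; in practice the official `openai` SDK would handle the HTTP call.

```python
import json


def build_vision_request(prompt: str, image_url: str, max_tokens: int = 300) -> dict:
    """Build a Chat Completions request body that sends text plus one image to gpt-4o."""
    return {
        "model": "gpt-4o",
        "max_tokens": max_tokens,
        "messages": [
            {
                "role": "user",
                # A single user message can mix text parts and image parts.
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


if __name__ == "__main__":
    body = build_vision_request(
        "Describe the character in this picture.",
        "https://example.com/sally.png",  # hypothetical placeholder URL
    )
    # This JSON would be POSTed to https://api.openai.com/v1/chat/completions
    # with an "Authorization: Bearer <API key>" header; sending it is left out here.
    print(json.dumps(body, indent=2))
```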

In summary, the availability and accessibility of GPT-4 Omni have been greatly expanded, with the model being integrated into the free tier of ChatGPT and offered through the OpenAI API at more affordable prices, making it a compelling choice for developers and users alike.

Consistent Character Creation with GPT-4 Omni

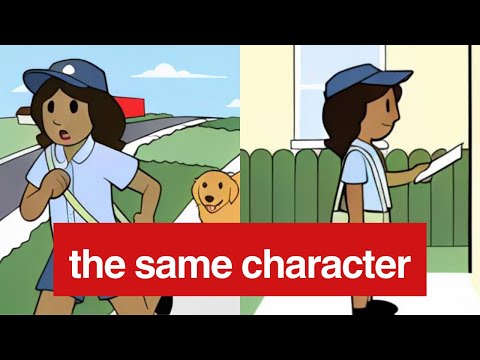

The ability to create consistent characters across multiple scenes is one of the most striking capabilities OpenAI showcased for GPT-4 Omni. Because the model was trained end-to-end across text, vision, and audio, OpenAI's examples show it generating images that preserve the same character attributes, such as clothing, accessories, and overall appearance, even as the character is placed in different scenarios.

In the examples provided, the model is able to consistently depict the character "Sally" as a smiling mail delivery person, with her bag and uniform remaining the same across different scenes. This is a significant improvement over previous models, which would have to rely on textual descriptions to try to maintain character consistency.
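The text-description approach that earlier models depended on can be sketched as a fixed "character sheet" restated verbatim in every scene prompt. `CharacterSheet` and `scene_prompt` are hypothetical names, not part of any OpenAI API, and the code only builds prompt strings; it does not call a model or reproduce GPT-4 Omni's native image generation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CharacterSheet:
    """Hypothetical container for the attributes a character must keep in every scene."""
    name: str
    role: str
    attributes: tuple[str, ...] = ()

    def describe(self) -> str:
        traits = ", ".join(self.attributes)
        return f"{self.name}, a {self.role} ({traits})"


def scene_prompt(sheet: CharacterSheet, scene: str) -> str:
    """Build one scene prompt that restates the same character description verbatim."""
    return (
        f"Draw {sheet.describe()}. {scene} "
        f"Keep {sheet.name}'s appearance exactly as described."
    )


if __name__ == "__main__":
    sally = CharacterSheet(
        name="Sally",
        role="mail delivery person",
        attributes=("smiling", "uniform", "mail bag"),
    )
    for scene in ("She is delivering a letter on a sunny street.",
                  "She is sorting mail at the post office."):
        print(scene_prompt(sally, scene))
```

Restating identical text in every prompt is the only lever this approach has; the model must still render that text the same way each time, which is exactly where consistency tends to break down.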

The speed and accuracy of GPT-4 Omni's visual outputs could also allow for more seamless and natural interactions, where the model quickly responds to visual prompts with appropriate visual responses. This opens up new possibilities for applications that require consistent character representation, such as interactive storytelling, virtual assistants, and even video game development.

While the examples within the ChatGPT interface may not fully showcase the model's capabilities, the potential for consistent character creation with GPT-4 Omni is clear. Developers can leverage this feature to create more engaging and immersive experiences for users, and further explore the possibilities of multimodal AI systems.

Conclusion

The new GPT-4 Omni model from OpenAI is a significant advancement in natural language processing, combining text, audio, and visual inputs to provide real-time, human-like interactions. The model's impressive performance across various benchmarks, as well as its reduced cost and increased accessibility, make it a compelling choice for developers and users alike.

However, the author's exploration of the model's ability to maintain consistent character representations across multiple scenes highlights the ongoing challenges in this area. While the examples provided in the announcement article suggest the model can preserve visual details, the author's own experiments within the ChatGPT interface suggest that this capability may not be as robust as claimed.

The author's suggestion to test the model's character consistency using the API, rather than the ChatGPT interface, is a valid one, as the latter may be subject to additional safety and moderation constraints that could impact the model's performance. Ultimately, further testing and experimentation will be necessary to fully understand the extent of the model's capabilities in this regard.
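One way to make such API experiments comparable is to list the attributes a character should keep, note which ones actually appear in each generated scene, and score the drift. The sketch below does only that bookkeeping: `attribute_drift` and `consistency_score` are hypothetical helpers, and the scene annotations in the example are placeholder inputs, not results from GPT-4 Omni.

```python
def attribute_drift(expected: set[str],
                    observed_by_scene: dict[str, set[str]]) -> dict[str, set[str]]:
    """For each scene, return the expected attributes that were NOT observed."""
    return {scene: expected - observed for scene, observed in observed_by_scene.items()}


def consistency_score(expected: set[str],
                      observed_by_scene: dict[str, set[str]]) -> float:
    """Fraction of (scene, expected attribute) pairs in which the attribute was present."""
    if not expected or not observed_by_scene:
        raise ValueError("need at least one expected attribute and one scene")
    hits = sum(len(expected & observed) for observed in observed_by_scene.values())
    return hits / (len(expected) * len(observed_by_scene))


if __name__ == "__main__":
    expected = {"smiling", "uniform", "mail bag"}
    # Placeholder annotations, e.g. noted by hand after looking at each generated image.
    observed = {
        "scene 1": {"smiling", "uniform", "mail bag"},
        "scene 2": {"smiling", "uniform"},
        "scene 3": {"uniform", "mail bag"},
    }
    print(attribute_drift(expected, observed))
    print(f"consistency: {consistency_score(expected, observed):.2f}")
```

Running the same scenes through both ChatGPT and the API and comparing the two scores would turn the author's informal impression into a side-by-side measurement.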

Overall, the release of GPT-4 Omni represents an exciting step forward in the field of multimodal AI, and the author's insights provide a valuable perspective on both the model's strengths and the areas that may require further refinement.

FAQ
